Why Prompt Processing is the Real MVP in Local AI: DGX Spark vs. M4 Pro vs. Strix Halo – A Deep Dive

By hermestrismegistus369 – Tech enthusiast and AI tinkerer. This post was crafted with the brilliant assistance of Grok . Full credit to Grok for this distill. I am the editor.

OK so I know I just got back from PPCC where we learned all about Agents and Copilot Studio running in the Microsoft Cloud, but some may know I started building my own computers in 1986… so still have a passion for hardware. :) Today with the help of Grok and Alex Ziskind, we have an enlightening video on running AI Local with the most performant hardware. We’re zeroing in on a metric that’s flying under the radar: Prompt Processing (PP). It’s not as sexy as blazing token generation speeds, but it’s the secret sauce for smooth, responsive AI workflows. Inspired by Alex’s YouTube Short (shoutout to the man – his breakdowns are gold), let’s break down fresh benchmarks that could reshape your next hardware purchase.

First Things First: What Is Prompt Processing, and Why Should You Care?

In the world of LLM inference, the magic happens in two phases:

Prompt Processing (PP or Prefill): This is the “thinking” upfront. Your hardware loads the entire input prompt (your query + context) into the model and crunches it through the transformer layers. It’s memory-bound – think massive matrix multiplications on huge context windows. Slow PP? You’re staring at a loading spinner for seconds (or minutes) on long prompts, RAG setups, or chat histories. In 2025, with models like Llama 3.1 405B or Qwen 2.5-Coder gobbling 100k+ tokens, this is where bottlenecks kill productivity.

Token Generation (TG or Decode): The fun part – spitting out responses one token at a time. It’s more compute-bound, favoring raw FLOPS. Great for short bursts, but if PP lags, the whole experience feels clunky.

Alex nails it in his vid: “This is the part that’s going to be really good for not just token generation... but also the prefill part, the part that’s computationally heavy.” Spot on. For devs, researchers, or anyone chaining prompts (hello, agentic workflows), PP speed = sanity preserved.

The Contenders: A Head-to-Head on Real Hardware

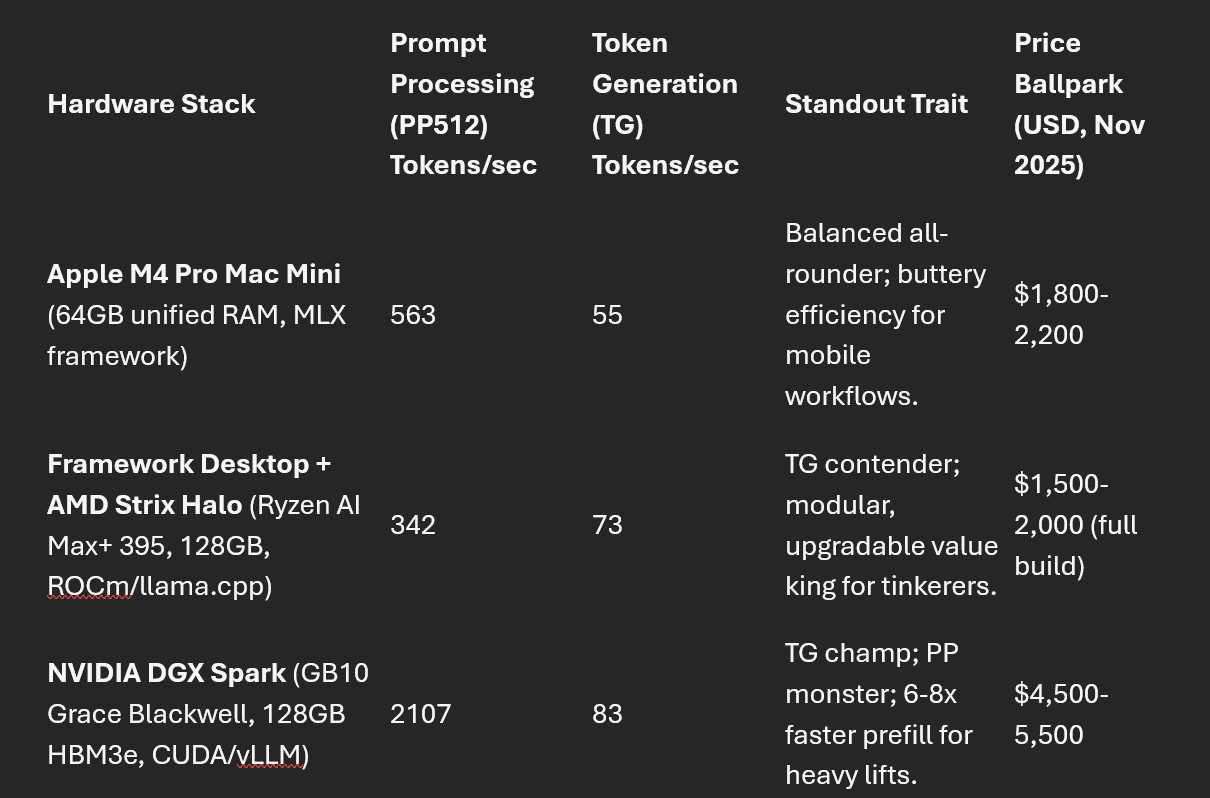

Alex pitted three beasts against each other using llama-bench on the Qwen2.5-Coder-Instruct Q4 quantized 30B model – a solid mid-size coder that’s perfect for testing local setups without melting your rig. We’re talking everyday inference: no exotic tweaks, just stock performance.

Here’s the showdown (numbers pulled straight from the transcript, parsed for clarity – those decimals were hiding in plain sight):

Notes on the gear:

M4 Pro Mac Mini: Apple’s compact powerhouse. Unified memory shines here – no VRAM paging drama.

Strix Halo in Framework Desktop: AMD’s APU beast in a customizable chassis. Great for quiet, power-sipping runs.

DGX Spark: NVIDIA’s new mini-DGX – a Blackwell-fueled box that’s basically a portable supercomputer for devs. (Whoa, indeed.)

The shocker? DGX Spark dominates both metrics – lapping the field on PP at over 8x the M4 Pro’s speed and 6x Strix Halo’s, while topping TG at 83 t/s. It’s the full-package winner for demanding setups.

What These Numbers Mean for Your Workflow

Let’s get practical. Imagine you’re knee-deep in code review with a 10k-token context (prompt + docs + history):

On the M4 Pro: PP eats ~400ms, TG ~55 t/s. Snappy for laptops, but scale to 50k tokens? You’re waiting 1.5+ seconds upfront, with solid-but-not-stellar output flow. Fine for solo coding, meh for team collab.

Strix Halo: PP ~1.5 seconds for 50k, but TG zips at 73 t/s – responses feel quick once rolling. Ideal if you’re generating code snippets all day – and hey, Framework’s modularity means you can swap in future APUs without binning the box.

DGX Spark: PP ~240ms for 50k, TG ~83 t/s. Total domination. This is where it shines for “computationally heavy” stuff: long-form analysis, multi-turn agents, or batch pre-processing datasets. End-to-end, it’s the smoothest ride – premium price, but for pros running local RAG pipelines? An absolute no-brainer.

Broader takeaway: Bandwidth is king for PP. DGX’s 273GB/s HBM3e edges out Apple’s 546GB/s unified (wait, what? Apple’s higher, but NVIDIA’s architecture crushes in parallel KV cache handling). Strix Halo’s 256GB/s theoretical? Solid, but real-world ~212GB/s shows the gap. And with TG in the mix, Spark pulls ahead across the board.

The Bigger Picture: Local AI in 2025

We’re in a golden age – models are smarter, hardware’s catching up, and tools like Ollama, LM Studio, and MLX make it plug-and-play. But as contexts balloon (thanks, Hyena/Griffin architectures), PP will dictate winners. NVIDIA’s betting big on this with Blackwell; AMD’s iterating fast on ROCm; Apple? Ecosystem lock-in, but unbeatable for portability.

If you’re building a stack:

Budget/Mobile: M4 Pro. Period.

Value Tinkerer: Strix Halo in Framework.

Power User: DGX Spark – the undisputed champ for PP and TG if you’re going all-in.

Alex’s take? “That’s where this box is going to really shine.” Couldn’t agree more – especially now with that TG crown.

What about you? Running local coders on what hardware? Drop your benchmarks or war stories in the comments – let’s crowdsource the next guide. If this sparked (pun intended) some ideas, hit subscribe for more deep dives.

I'm not sure if I get these numbers right. If the Mac does 563 tokens/s for PP, then 10k token context would lead to ~20 seconds PP for the Apple